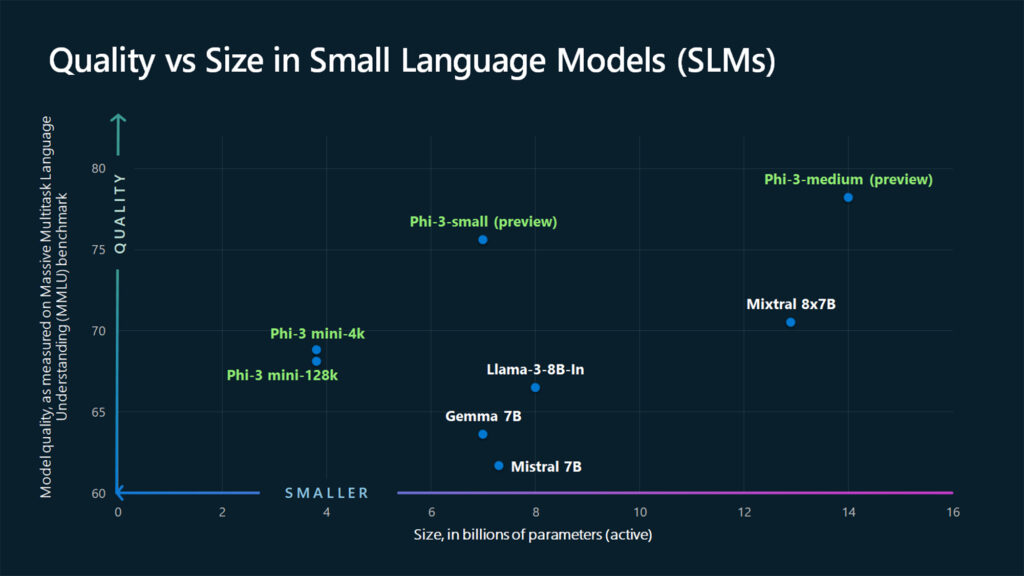

Exciting updates for AI developers and businesses: fine-tuning for the Phi-3-mini and Phi-3-medium models is now available in Azure, making AI even more customizable and accessible. Phi-3 is a family of Small Language Models (SLMs). It offers much of the capability and flexibility of a Large Language Model (LLM) but is small enough to run on mobile devices and within applications, without the need for massive cloud infrastructure.

One of the standout benefits of fine-tuning is that Phi-3 can be specialized on the subjects it is trained on, allowing it to deliver expert-level responses in those areas. Additionally, Azure AI Studio has rolled out a model-as-a-service option that lets Phi-3 models be deployed as serverless endpoints. This means you can use Phi-3 without building or managing the underlying infrastructure, which enables faster deployments and easier scaling.

With the addition of Phi-3 models, the Azure AI model catalog now includes more than 1,600 models. For full details on these new offerings, see the official announcement on the Azure blog.

Why This Update Matters

The availability of Phi-3 fine-tuning and the new deployment options in Azure matters to developers building AI applications for several reasons:

- SLMs vs. LLMs: LLMs like GPT-4o are extremely capable, but they require substantial compute resources, which makes them largely cloud-dependent. SLMs like Phi-3 can approach LLM-level performance on many tasks with far less overhead, making them well suited to mobile and edge devices.

- Fine-Tuning with Expert Data: LLMs are trained on a broad range of information, which can include inaccuracies or lack depth in specialized domains. Fine-tuning lets developers train Phi-3 on curated expert data, improving the accuracy and relevance of its responses in that domain.

- Accessibility for Developers: Phi-3 has already proven to be one of the best-performing SLMs available. The new fine-tuning and serverless deployment options make it easier for developers to integrate Phi-3 into their applications without investing in costly infrastructure.