Edge Computing – Examples using Google

Interestingly enough, edge computing is typically part of an IoT project, and IoT solutions quickly become Big Data problems (volume, velocity, variety, veracity, and value) that are compounded when blended with other IT and third-party data sources. Because there is too much data to make sense of manually, IoT solutions become data science projects. Using data science techniques effectively drives the performance of enterprise analytics, which includes many of the most important analyses performed in an IoT solution.

If the root of what you are trying to solve is big data and data science, shouldn’t you consider a platform built specifically for these types of problems? Shouldn’t you consider a platform built and used by the company that manages more data than anyone else on the planet? If data science is your game, shouldn’t you consider a platform built by the company that created the fastest-growing AI framework (TensorFlow), which is also at the core of that platform? I hope you answered yes to all of the above. With that in mind, below I outline an example of edge computing using Google Cloud Platform.

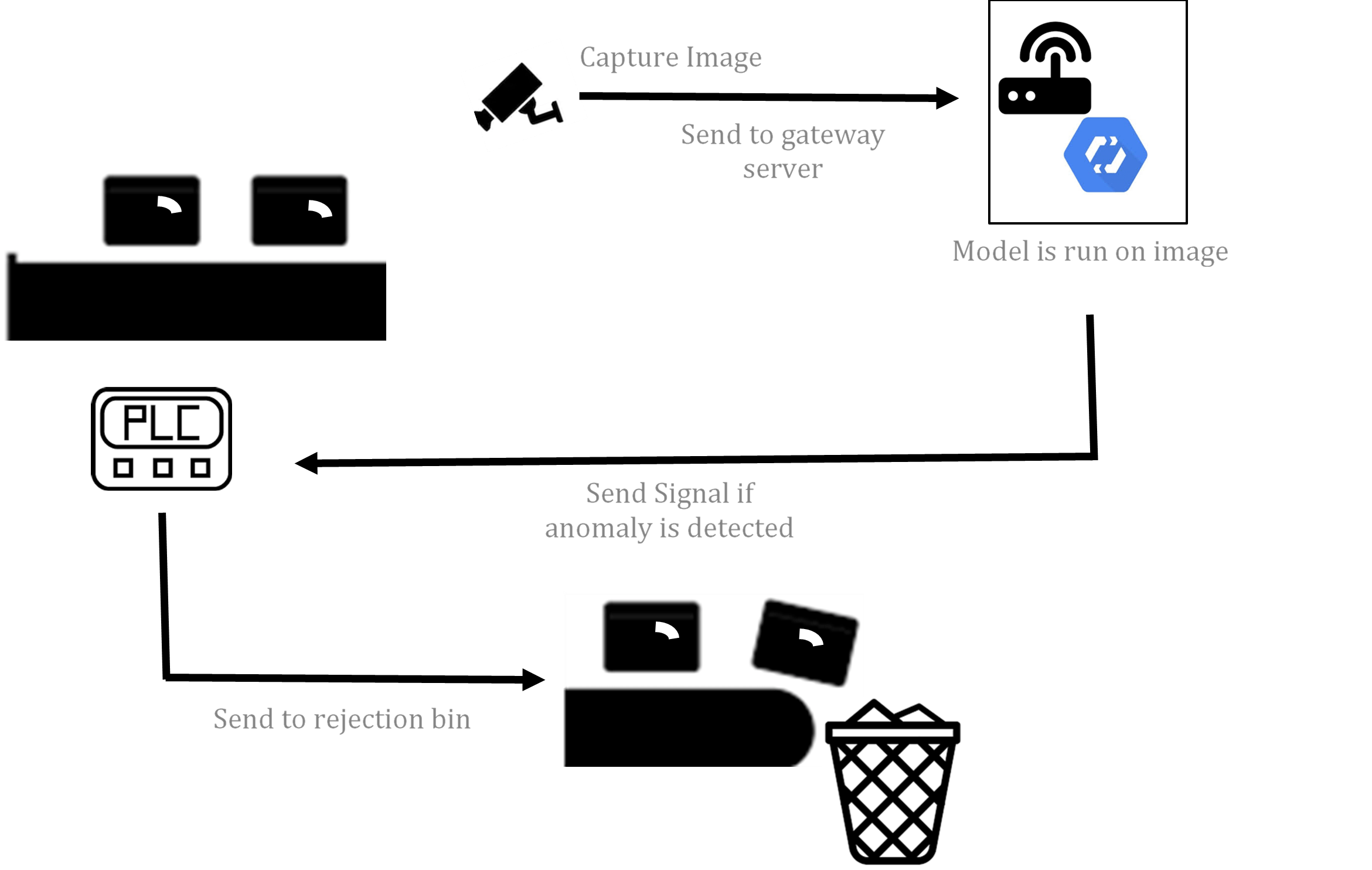

The use case is camera-based surface anomaly detection on widgets at each gate in the assembly process. The goal is to reduce the number of quality issues that make it through full assembly and to reduce or eliminate manual inspection at the end of the assembly line. If an anomaly is detected, a signal is sent to the PLC and the widget is automatically routed to a reject bin.

In this hypothetical use case, cameras are mounted at different points along the assembly line, specifically at the end of each assembly process. The cameras act as edge devices and are connected to the Gateway (Fog Node). The gateway server has a TPU, which lets us train a deep learning (vision) model in the cloud and then move the model to the Fog node to run inference locally.

* For this to work, the Gateway server needs custom code that sends a signal to the PLC.
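The gateway’s custom code boils down to one decision: score each camera frame with the on-device model and, if the anomaly score crosses a threshold, tell the PLC to reject the widget. The sketch below shows only that decision logic, under stated assumptions. The model call and the PLC call are injected as plain functions (`classify` and `send_plc_reject` are hypothetical stand-ins, not a Google or PLC vendor API), and the 0.8 threshold is an assumed value you would tune against your own data.

```python
from dataclasses import dataclass
from typing import Callable

# Assumed threshold: anomaly scores at or above this value reject the widget.
ANOMALY_THRESHOLD = 0.8


@dataclass
class InspectionResult:
    station_id: str
    anomaly_score: float
    rejected: bool


def inspect_widget(
    station_id: str,
    image: bytes,
    classify: Callable[[bytes], float],
    send_plc_reject: Callable[[str], None],
    threshold: float = ANOMALY_THRESHOLD,
) -> InspectionResult:
    """Score one camera frame and signal the PLC if it looks anomalous.

    classify: hypothetical stand-in for the model running on the gateway;
        returns an anomaly probability in [0, 1].
    send_plc_reject: hypothetical stand-in for the custom PLC integration.
    """
    score = classify(image)
    rejected = score >= threshold
    if rejected:
        # Ask the PLC to route this widget to the reject bin.
        send_plc_reject(station_id)
    return InspectionResult(station_id, score, rejected)


if __name__ == "__main__":
    plc_signals: list[str] = []

    def fake_model(image: bytes) -> float:
        # Pretend any frame containing b"scratch" is anomalous.
        return 0.93 if b"scratch" in image else 0.05

    result = inspect_widget("gate-3", b"...scratch...", fake_model, plc_signals.append)
    print(result.rejected, plc_signals)  # True ['gate-3']
```

Injecting the model and PLC hooks keeps the reject rule testable without cameras or a PLC attached; on the real gateway they would be replaced by the deployed model and the vendor-specific PLC client.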

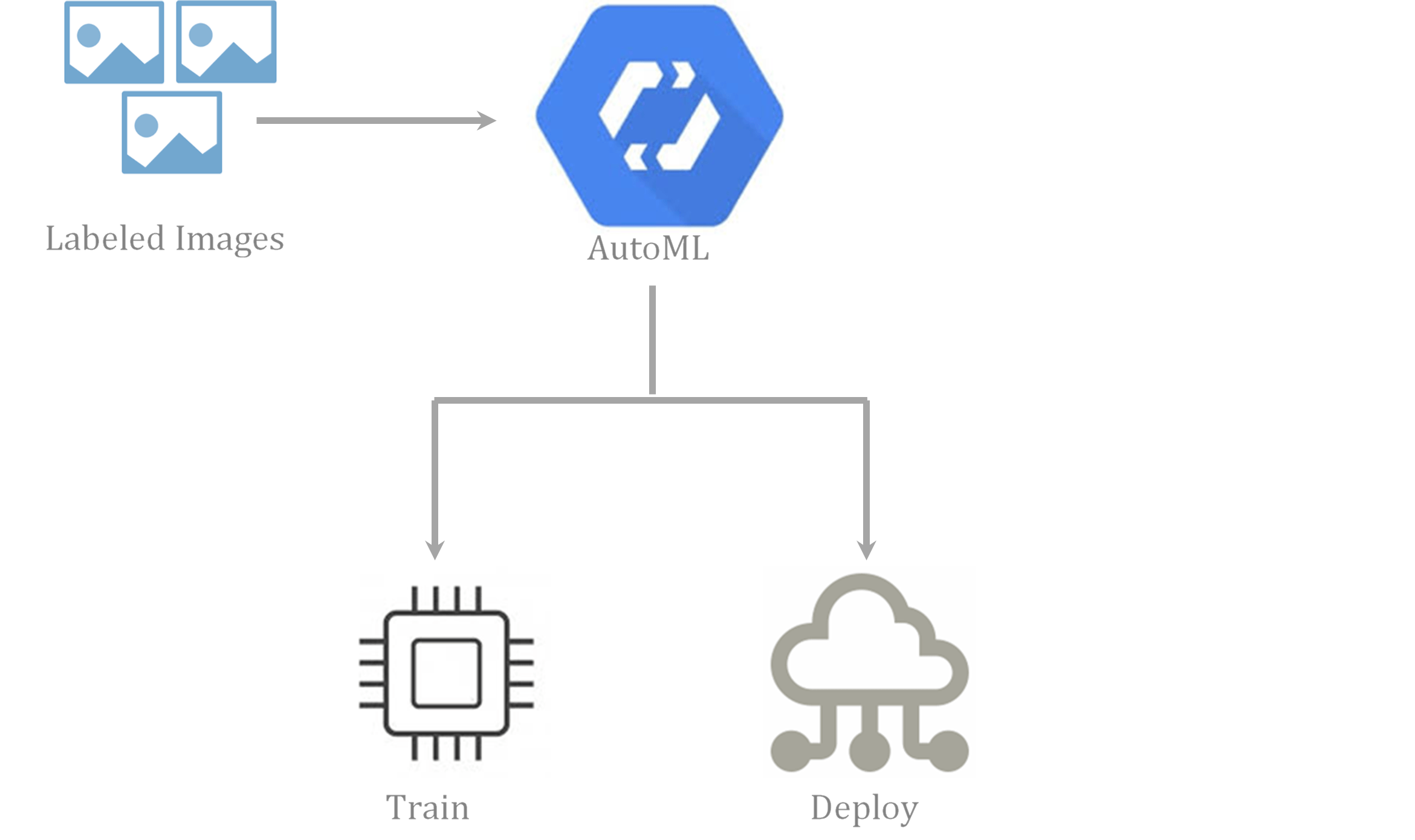

The following outlines the services and flow for training a deep learning model with Google’s AutoML service:

- Collect images of the widget both with and without surface-level anomalies, and label them accordingly.

- Feed the labeled images into the AutoML service, which trains the model automatically without any coding or data science involvement.

- Deploy the model to the Fog/Gateway server.
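The AutoML flow above starts from a labeled image set. As a minimal sketch, assuming the images already sit in Cloud Storage and that the import file uses the simple one-row-per-image `gs://bucket/path,label` CSV layout (AutoML also accepts an optional leading column for the train/validation/test split, so check the current import documentation), the labeled list could be generated like this. The bucket name and the `anomaly`/`no_anomaly` label names are hypothetical.

```python
import csv
import io

# Hypothetical label names for the two classes in this use case.
LABELS = {True: "anomaly", False: "no_anomaly"}


def build_import_csv(samples: list[tuple[str, bool]]) -> str:
    """Return CSV text with one `gs://path,label` row per labeled image.

    samples: (Cloud Storage URI, has_anomaly) pairs.
    """
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    for uri, has_anomaly in samples:
        if not uri.startswith("gs://"):
            raise ValueError(f"expected a Cloud Storage URI, got {uri!r}")
        writer.writerow([uri, LABELS[has_anomaly]])
    return buffer.getvalue()


if __name__ == "__main__":
    # Hypothetical bucket and object names.
    print(build_import_csv([
        ("gs://widget-images/gate1/0001.jpg", False),
        ("gs://widget-images/gate1/0002.jpg", True),
    ]), end="")
```

Using the `csv` module rather than string concatenation means any URI that happens to contain a comma is quoted correctly.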

You could potentially use more Google Cloud services:

- After each rejection, the application running on the Fog/Gateway server should send the image to the cloud along with a rejection signal.

- The image should be uploaded to Google Cloud Storage for review and so the model can be retrained.

- The rejection signal sent to the cloud should flow through Google Cloud IoT Core, along with any other PLC and/or sensor data collected from the assembly line for other types of analysis.

- Cloud Functions can be used to integrate and “push” data to back-office IT systems such as ERPs. For example, you may want to generate a work order when the number of rejections suggests a severe quality issue, and/or reduce expected inventory counts.
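The work-order rule in the last bullet needs a concrete definition of a “severe quality issue.” A minimal sketch, assuming a hypothetical policy of five or more rejections at the same station within ten minutes, is a rolling-window counter that a Cloud Function could consult for each incoming rejection message. The class name, thresholds, and trigger are illustrative assumptions, and the actual ERP call is left out.

```python
from collections import deque
from dataclasses import dataclass, field

# Hypothetical policy: 5+ rejections at one station within 10 minutes
# counts as a "severe quality issue" that warrants a work order.
WINDOW_SECONDS = 600.0
MAX_REJECTIONS = 5


@dataclass
class RejectionMonitor:
    window_seconds: float = WINDOW_SECONDS
    max_rejections: int = MAX_REJECTIONS
    _events: dict[str, deque[float]] = field(default_factory=dict, init=False, repr=False)

    def record(self, station_id: str, timestamp: float) -> bool:
        """Record one rejection (timestamps in seconds, assumed in order).

        Returns True when a work order should be raised for this station.
        """
        events = self._events.setdefault(station_id, deque())
        events.append(timestamp)
        # Drop rejections that have fallen out of the rolling window.
        while timestamp - events[0] > self.window_seconds:
            events.popleft()
        if len(events) >= self.max_rejections:
            # Reset so the same burst does not raise duplicate work orders.
            events.clear()
            return True
        return False


if __name__ == "__main__":
    monitor = RejectionMonitor()
    for t in (0, 60, 120, 180, 240):
        if monitor.record("gate-2", t):
            print(f"raise work order for gate-2 at t={t}s")
```

Cloud Functions instances should be treated as stateless, so a production version would keep these counts in a shared store such as a database rather than in memory; the in-memory deque only keeps the sketch self-contained.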