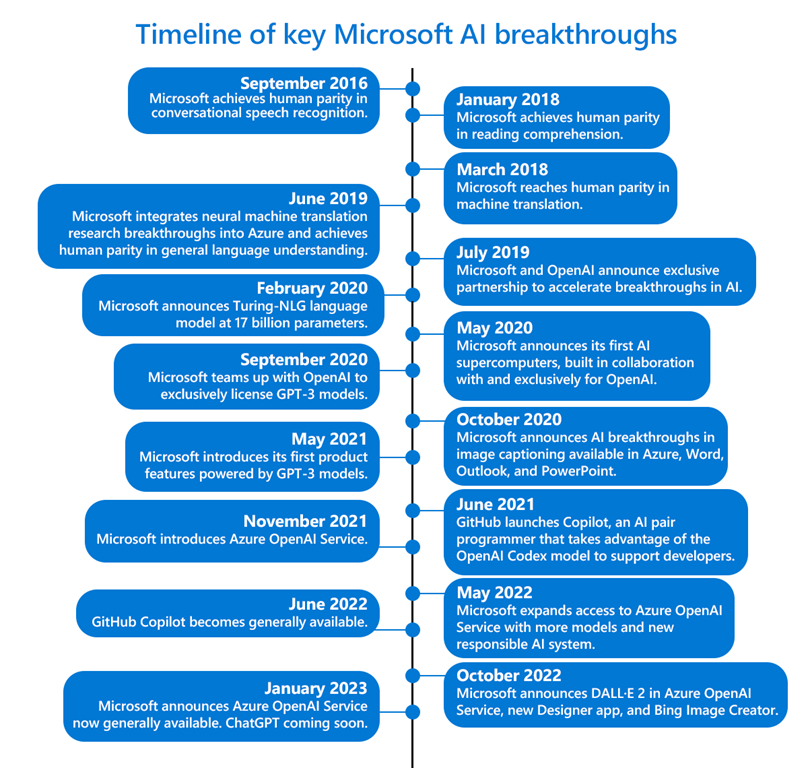

OpenAI capabilities like ChatGPT are now part of the Azure platform. OpenAI and Microsoft have brought the OpenAI APIs into Azure, combining the power of tools like ChatGPT with the protection, controls, and security of the Azure platform. This allows organizations to build solutions that use the power of ChatGPT without their data being used to further train the public ChatGPT models.

The current release of the Azure OpenAI Service includes the GPT-3 series, the Codex series, and the Embeddings series. It does not yet provide the DALL-E components. Currently, customers interested in using the Azure OpenAI Service must apply for access and describe the business case they want to solve. Once approved, the service is whitelisted for the specific Azure subscription named in the request. Details on this release can be found here.

Why This Matters

- While organizations could use the OpenAI APIs available directly from OpenAI, any data sent to those APIs can be used to further train the models. This limits what an organization is willing to send to the service, and therefore the value it can get from it. The Azure OpenAI Service provides the same power as OpenAI, but in a secure, protected, Azure-native experience.

- The initial focus areas of the service are chat, summarization, natural language, and code scenarios. These could be used to create a more powerful bot experience, rapidly convert code from one language to another (for example, Python to C#), or rapidly review and summarize massive amounts of data.

- As additional OpenAI capabilities are released, those too will arrive in Azure, bringing the power of tools like DALL-E to more organizations.

- Because of concerns about how these services might be used, Microsoft is carefully controlling access and requiring detailed business cases before unlocking the service.
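
To make the summarization scenario above more concrete, here is a minimal sketch of how a request to an approved Azure OpenAI resource might be assembled in Python. It only builds the HTTP request and does not send it. The resource name, deployment name, key, prompt wording, and the `build_summarize_request` helper are all hypothetical placeholders, and the `api-version` value is an assumption that should be checked against the current Azure documentation.

```python
import json
import urllib.request

# Assumed API version; verify against the current Azure OpenAI documentation.
API_VERSION = "2022-12-01"


def build_summarize_request(resource, deployment, api_key, text, max_tokens=120):
    """Build (but do not send) a completions request asking for a summary.

    `resource` and `deployment` are the names chosen when the Azure OpenAI
    resource and model deployment were created (hypothetical values here).
    """
    url = (
        f"https://{resource}.openai.azure.com/openai/deployments/"
        f"{deployment}/completions?api-version={API_VERSION}"
    )
    payload = {
        # Illustrative prompt wording, not an official template.
        "prompt": f"Summarize the following text in two sentences:\n\n{text}\n\nSummary:",
        "max_tokens": max_tokens,
        "temperature": 0.2,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"api-key": api_key, "Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_summarize_request(
        "my-resource", "my-deployment", "<your-key>", "Text to summarize goes here."
    )
    print(req.get_method(), req.full_url)
    # To actually call the service (requires an approved subscription):
    # with urllib.request.urlopen(req) as resp:
    #     print(json.load(resp)["choices"][0]["text"])
```

Because the request goes to an endpoint inside the organization's own Azure resource and is authenticated with that resource's key, it stays under the Azure controls described above rather than going to the public OpenAI endpoint.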